CloverDX Resources

Find information, guides and content about CloverDX, including release notes, documentation, links to learning resources, and white papers.

CloverDX documentation & release notes

CloverDX 7.3

CloverDX 7.2

CloverDX 7.1

CloverDX 7.0

CloverDX 6.7

CloverDX 6.6

CloverDX 6.5

CloverDX 6.4

CloverDX 6.3

CloverDX 6.2

CloverDX 6.1

CloverDX 6.0

CloverDX 5.17.3 Documentation

CloverDX 5.16.2 Documentation

CloverDX 5.15.4 Documentation

CloverDX 5.14.3 Documentation

CloverDX 5.13.4 Documentation

CloverDX 5.12.4 Documentation

CloverDX 5.11.5 Documentation

CloverDX 5.10.4 Documentation

Learning resources

A video series in the CloverDX Academy to help you get up and running with CloverDX. Build your first data transformations, learn about automation, and more.

Log in to submit support tickets, download new versions and access licences.

Questions and answers on how to solve problems in CloverDX.

How-tos, articles, announcements and advisories.

The latest posts on all things data on the CloverDX blog.

Details and release notes for current and previous versions of CloverDX.

Running a Healthy CloverDX Server II: Troubleshooting

Running a Healthy CloverDX Server I: Configuration Best Practices

What's new in CloverDX 7.3

What's new in CloverDX 7.2

The blueprint for scalable and efficient data ingestion

What's new in CloverDX 7.1

Guides & white papers

How Faster Data Onboarding Fuels Customer Acquisition and Growth

Cracking the Build vs Buy Dilemma for Data Integration Software

A Guide to Migrating Data Workloads to the Cloud

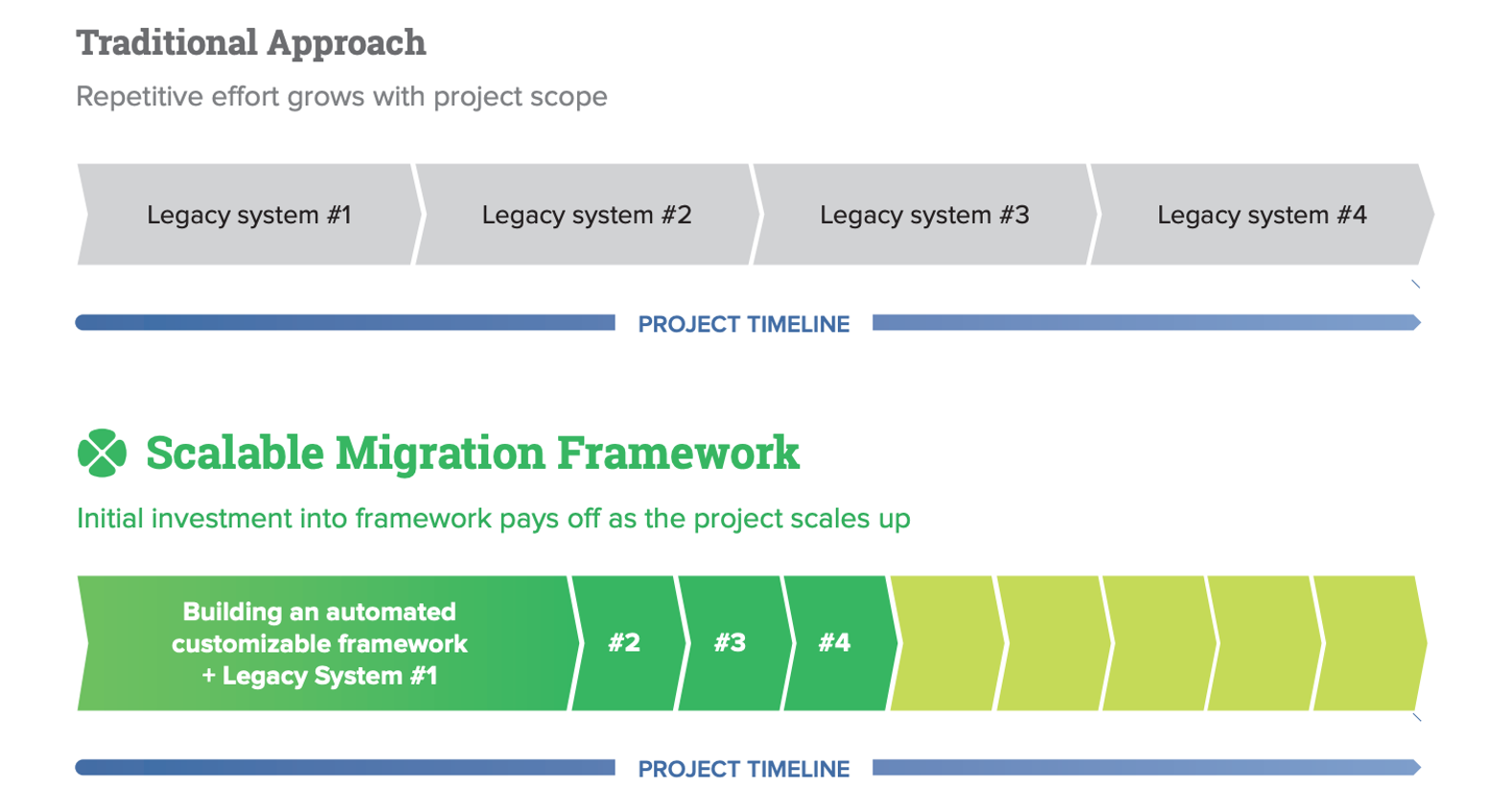

Building Large Scale Data Migration Frameworks

How to Bridge Data Models and IT Operations for Simpler Compliance

Buyers Guide to Data Integration Software

Architecting Systems For Effective Control Of Bad Data

Your Guide to Enterprise Data Architecture

Designing Data Applications The Right Way